How Public Cloud ate private hosting

Let me start with a confession: Professor Fréry, I was wrong.

As part of my MBA’s strategy course I had to write a strategic diagnosis of my company. As any former student of Prof. Fréry will tell you, this is a brutal and extremely demanding assignment, easily 3 to 4 weeks of full-time work. The first and most crucial part of the assignment is to split the company into strategic business units (SBUs). An SBU is basically the only unit it makes sense to study from a strategic perspective. Anything smaller, and you don’t have a full-fledged entity. Anything bigger, and you’re mixing different contexts (customers, competitors, channels, resources, etc.) and your analysis makes no sense. So it’s crucial to define your SBUs correctly, or the rest of the diagnosis is flawed.

Guess what? I got it wrong.

At the time, I was working for a managed service provider of public and private cloud solutions. How to segment the company came down to this question: should public and private cloud services be considered separate strategic business units?

The criteria to identify an SBU are:

- does it have specific customers?

- does it address specific markets?

- does it have specific competitors?

- does it leverage specific technologies?

- does it require specific resources?

- does it have specific sales channels?

Applying these criteria didn’t give a clear-cut answer, but I decided that, in the company’s context, public and private cloud businesses belonged to the same SBU.

It was only a few years later, when I read The Innovator’s Dilemma by Clayton Christensen, that I understood how wrong I’d been.

The Innovator’s Dilemma

It’s hard to summarize a book[1] in a single paragraph, so if you haven’t read The Innovator’s Dilemma, I can only encourage you to do so. That being said, here is my attempt at a summary (if you’re already familiar with the book’s thesis you can skip directly to how it applies to cloud computing).

The book aims to answer the question “Why do new technologies cause great firms to fail?” through numerous case studies in different industries. To understand the book’s thesis, it’s worth taking some time to qualify what Christensen means by “great firms” and “new technologies”.

Great firms

In the book’s context, a great firm is one that is well-managed and efficiently organized to pursue high-value opportunities and meet its customers’ demands. This means that such a company will:

- seek to maintain or increase revenues and margins, and therefore

  - prefer higher-margin products to low-margin products,

  - prefer larger markets to smaller or unknown markets;

- work within its value network (meaning its network of suppliers and customers) to evaluate and request new features from its suppliers, and surface new needs from its customers;

- focus on and improve product features which are most valuable to its customers;

- build processes, incentives and a company culture that enforce all the traits listed above.

New technologies

Christensen distinguishes two kinds of innovation, sustaining and disruptive.

Sustaining innovations are the next wave of an S curve. They bring continuing improvement to a given market, i.e. they improve products along the qualities that matter to the existing value network. An example of a sustaining innovation from the disk drive industry (which Christensen analyses extensively in the book) is the introduction of magneto-resistive heads, which improved reading speed and disk density: two traits prized by existing disk drive customers.

Market leaders manage to ride sustaining innovation waves, no matter how technologically complex, because a sustaining innovation ticks all the boxes of what great firms are good at:

- it brings improvements which are sought after by their customers;

- it can bring larger market share and improve margins;

- it can give access to higher-margin markets (more on this later);

- all this happens in the company’s existing value network, which means customer demand is known and management can build a solid business case and prioritize the project.

Disruptive innovations, on the other hand, do not bring improvements that the existing customers value; they do, however, have qualities which can make them a good fit for a different market. An example of a disruptive innovation from the disk drive industry is drives with a smaller form factor (e.g. going from 5.25-inch drives to 3.5-inch) but with less capacity than existing drives. The new 3.5" drives were of no interest to the existing market: makers of desktop computers had no need for the smaller form factor, seeking instead improvements in storage and speed. However, the new drives’ smaller size was an asset in the new (at the time) notebook market.

Disruptive innovations are often technologically straightforward, reusing well-known tech. As such, it is extremely common for market leaders to experiment with (or even invent) the innovation, and to then discard it for business reasons: their existing customers don’t want it, there’s no clear market for it, etc.

This means that disruptive innovations initially thrive in adjacent markets, which value different qualities. In this context, a disruptive innovation’s “flaw” can actually become an advantage. To take another example from the book, when hydraulic back-hoes first appeared they were dramatically underpowered compared to the cable excavators of the time: they had smaller buckets and less reach, which made them unusable for the large construction sites and public works that were the existing market for excavators. However, their small buckets and small size (which made them more maneuverable and easier to move from one dig to the next) made them a very good fit for residential work and sewage projects which were previously dug by hand.

It hasn’t escaped you that we don’t see 5.25-inch drives anymore, and that hydraulic excavators have conquered the construction market. This is the central thesis of the book: disruptive technology eventually displaces the previous wave of innovation.

How does this happen?

Technology supply outpaces market demands

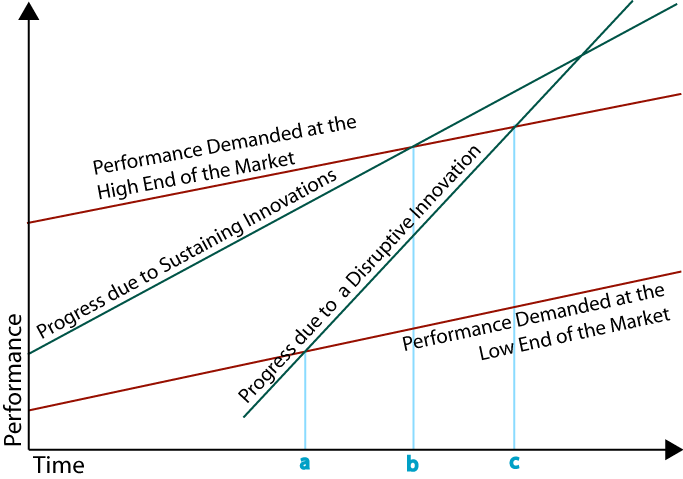

The final piece of the puzzle is that innovation progresses faster than market demand. That is, a given innovation wave (i.e. series of sustaining innovation) will add performance faster than the market requires. This is illustrated in the graph below by the fact that the green lines (innovation growth) grow faster and eventually outgrow the red lines (market demand).

Technology progress vs market demand (source)

Let’s illustrate this graph with our previous example of 5.25" drives (first green line) and 3.5" drives (second green line), in the desktop computer market (red lines).

| Time | Desktop PC market demand | 5.25" drive capacity | 3.5" drive capacity |
|---|---|---|---|
| a | 200 - 400 MB | 350 MB | 200 MB |
| b | 250 - 500 MB | 500 MB | 350 MB |
| c | 300 - 600 MB | 700 MB | 600 MB |

Before point a, 3.5" drives simply don’t have enough storage to be of interest to PC makers. Let’s say desktop computers need between 200 MB (low end) and 400 MB (high end) of hard drive capacity. 5.25" drives provide (on average) 350 MB of storage, right in this range. The newer 3.5" drives, on the other hand, can only provide 150-200 MB of storage.

At point b, incremental improvements to 5.25" drives bring their average capacity to 500 MB, enough to service the high end of the PC market, which now requires 250-500 MB of storage. 3.5" drives have also progressed (faster than 5.25" drives!) and now offer 350 MB: enough to service the lower half of the market.

At point c, most PCs on the market offer between 300 and 600 MB of storage. 3.5" drives have progressed enough to completely fill this need, while 5.25" technology, reaching 700 MB, has overshot it. At this point, PC makers have no incentive to choose 5.25" drives over 3.5" drives on the original criterion of storage, since both technologies more than meet market demands. Instead, other factors such as price and form factor come into play and tilt the balance in favor of 3.5" drives.

When this cycle is complete, the disruptive technology has usually completely overtaken the original market.
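To make the arithmetic behind the three snapshots explicit, here is a minimal Python sketch built on the figures from the table above. The `coverage` and `overshoot` helpers, and the assumption that demand is spread evenly across each range, are mine and purely illustrative; they are not part of Christensen's model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    """One row of the table: demand range and drive capacities, in MB."""
    label: str
    demand_low: int
    demand_high: int
    cap_525: int
    cap_35: int


# Values copied from the table above.
SNAPSHOTS = [
    Snapshot("a", 200, 400, 350, 200),
    Snapshot("b", 250, 500, 500, 350),
    Snapshot("c", 300, 600, 700, 600),
]


def coverage(capacity: int, low: int, high: int) -> float:
    """Share of the demand range [low, high] that a drive can serve.

    Illustrative assumption: a drive serves every PC that needs at most
    `capacity` MB, and demand is spread evenly over the range.
    """
    if capacity <= low:
        return 0.0
    if capacity >= high:
        return 1.0
    return (capacity - low) / (high - low)


def overshoot(capacity: int, high: int) -> int:
    """Capacity in MB beyond what the most demanding PC asks for."""
    return max(0, capacity - high)


def first_full_coverage(snapshots: list) -> Optional[str]:
    """Label of the first snapshot where 3.5" drives cover the whole range."""
    for s in snapshots:
        if coverage(s.cap_35, s.demand_low, s.demand_high) == 1.0:
            return s.label
    return None


if __name__ == "__main__":
    for s in SNAPSHOTS:
        c525 = coverage(s.cap_525, s.demand_low, s.demand_high)
        c35 = coverage(s.cap_35, s.demand_low, s.demand_high)
        extra = overshoot(s.cap_525, s.demand_high)
        print(f'{s.label}: 5.25" covers {c525:.0%}, '
              f'3.5" covers {c35:.0%}, 5.25" overshoot {extra} MB')
```

Under these assumptions, 3.5" drives go from covering none of the desktop range at point a, to 40% at point b, to all of it at point c, which is also the first point where 5.25" drives offer capacity (100 MB of it) that no PC maker is asking for.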

Market leaders race to the Northeast…

How does this play out from the perspective of a market leader in the existing technology?

When the disruptive technology emerges, it is, as we saw previously, evaluated and rejected by market leaders for business reasons. The new technology is left to develop in another, smaller market, usually one with lower margins.

The market leaders will instead ride the current innovation wave, offering steadily improving products to their customers. At some point, their products become good enough to target a higher-margin market, and the companies, being great firms, will focus their marketing, commercial, and engineering efforts on conquering this new market. This is what happens after point b for the sustaining innovation: its performance allows it to target the market “one step up”; in our 5.25" drive example, this might be high-end workstations.

The disruptive innovation will of course follow its own upward trajectory: from the initial, smaller market, it will eventually reach the original market (this is what happens at point a), bringing with it companies used to surviving on lower margins. At this point, the market leaders have the choice between fighting on price for the lower end of the market, or retreating to the larger margins of the high-end market. Of course, the choice is clear-cut: the market leaders will “race to the Northeast”, i.e. seek to improve their products and move on to the higher-end market, letting the disruptive innovation conquer all of the original market.

…and the disruptors chase after them

This pattern repeats as the disruptive innovation enters market after market, chasing after the incumbents. Christensen gives the example of mini-mills in steel production. At first mini-mills could only provide low-quality steel, suitable only for rebar. The incumbents (integrated steel mills) were happy to leave this low-margin market to the newcomers. But as mini-mills improved, they cornered the market for larger bars and rods, then structural beams. Meanwhile the integrated steel mills concentrated on higher-end, higher-margin products, such as sheet steel… until another disruptive innovation allowed mini-mills to enter the sheet steel market. While this innovation initially yielded lower-quality sheet steel than traditional methods, it caught up within a few years, allowing mini-mills to displace integrated steel mills across the entire steel production market.

Back to Cloud computing

We’re now ready to illustrate how this process played out in Cloud computing, and how public cloud more or less completely displaced private cloud.

Cloud computing (specifically, Infrastructure as a Service) is, at a very high level, the ability to rent computers rather than own them[2]. Cloud computing allows companies to turn capital expenditures (purchasing hardware, building datacenters, etc.) into operational expenses, and to delegate engineering problems with low business value but high criticality (datacenter security, power and connectivity redundancy, hardware procurement…) to a supplier which specializes in them.

To make a non-tech analogy, using Cloud Computing services is akin to renting office space rather than building and maintaining your own buildings.

There are two flavors of Cloud computing: public clouds and private clouds. To continue the office analogy, public clouds are the equivalent of hot-desking in a co-working space: you’re seated in an open space and you take whichever desk is free when you get to the office. You pay by the day, and the prices are low.

But what if you always want the same desk? What if you’d like some privacy and the ability to leave some stuff on your desk? In this case, most co-working spaces will rent you a closed office, for a higher price, that you will pay by the month whether you use it or not. This closed office is the equivalent of a private cloud.

My assertion (the co-working analogy may have tipped you off) is that private cloud is a sustaining innovation, while public cloud is a disruptive one.

Private cloud is a sustaining innovation

At the heart of Cloud computing’s rise is a technology called virtualization, which makes it possible to run several virtualized computers (called virtual machines or VMs) on a single physical server, allowing for large economic and operational gains. It is common to have 10 to 20 VMs running on a single server, which massively optimizes hardware costs. Provisioning a new physical server can take hours (if you have a spare one in stock) to weeks (if you need to order one); by contrast, spinning up a new VM takes minutes.
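As a rough illustration of what a density of 10 to 20 VMs per server means for hardware, here is a small Python sketch. The fleet of 200 workloads is a made-up figure chosen only to show the arithmetic, and `servers_needed` is a hypothetical helper that ignores real-world constraints such as memory, redundancy and spare capacity.

```python
def servers_needed(vm_count: int, vms_per_server: int) -> int:
    """Physical servers required to host vm_count VMs at a given density."""
    if vm_count < 0:
        raise ValueError("vm_count must be non-negative")
    if vms_per_server < 1:
        raise ValueError("vms_per_server must be at least 1")
    # Integer ceiling division: a partially filled server still counts as one.
    return (vm_count + vms_per_server - 1) // vms_per_server


if __name__ == "__main__":
    workloads = 200  # hypothetical fleet size, for illustration only
    for density in (1, 10, 20):  # 1 VM per server stands in for "no virtualization"
        print(f"{density:>2} per server: {servers_needed(workloads, density)} servers")
```

With these numbers, 200 workloads that would each have needed their own machine fit on 20 servers at a density of 10, and on 10 servers at a density of 20.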

Before virtualization, managed service providers already hosted and managed servers for their corporate customers. They were quick to adopt the new technology, which enabled them to streamline operations and use hardware more efficiently. Further technological advances enabled the virtualization of storage, network, and security equipment and the gradual replacement of expensive, custom appliances by commodity servers. The end result of applying these sustaining innovations to the private hosting market is private cloud: highly-optimized, massively virtualized infrastructure dedicated to a customer’s IT needs, balancing high quality hosting, price flexibility and operational agility.

Private hosting (and later, private cloud) customers were companies, often large ones, with well-structured IT departments and specific IT needs. The servers (and later, VMs) would host critical company services: email, CRM, ERP… These services, in turn, would have very specific requirements in terms of infrastructure. Therefore, the table stakes for private hosting were quite high: providers needed to accommodate specific hardware; support specific software, including Windows Server and the Microsoft suite of services[3]; ensure that servers would keep running or, in case of a failure, that finicky applications could be reliably restarted without losing critical business data; and meet a host of other requirements. Installing and configuring a new server was a long and fiddly process, which virtualization only partially alleviated. In a common industry metaphor, servers were pets: each was unique, each required ongoing grooming and attention, and losing one was a pain.

Public cloud is a disruptive innovation

Amazon Web Services, the poster child for public cloud, launched its first VM service, EC2[4], in 2006. Xen, the virtualization technology used by EC2, was first released in 2003; VMware, another major virtualization company, was founded in 1998 and launched its flagship product in 2001. In other words, by the time EC2 launched, virtualization was well established. Remember, disruptive innovation often uses well-known tech.

When EC2 first launched, the offering was limited and fell far short of the requirements for corporate IT. VMs could go down at any time, and when you turned them back on, they would have a different address and your data would be gone; for licensing reasons, Windows and Red Hat Linux (the main corporate operating systems of the time) were not supported… and the list goes on. For the first 2 years of its existence, the service was officially in beta and offered no service level agreement[5]. In other words, the first public cloud offering was woefully inadequate for the traditional corporate hosting market.

And indeed, AWS’s first customers were not established companies; they were startups, research labs, small teams and individuals, who could work around these constraints and for whom the low cost and flexibility of these early services were paramount. The archetypal early adoption scenario was a nimble, cash-poor company (or a small department within a large company) using AWS to spin up some VMs, process a large amount of data, and turn off the VMs, at a fraction of the cost and time it would have taken with a traditional IT organization. This use case is about as far as you can get from the dominant private hosting use case: running a rigid corporate IT system with stringent availability and data integrity requirements.

Technology supply outpaces market demand

You can guess what happened next: public cloud got better… and better… and even better.

Features and services were added at a steady clip, enabling AWS (and later, the other public Cloud companies) to catch up to the corporate market’s expectations, and then overtake these expectations with such features as multi-region hosting with automatic failover, something which was historically complex and very costly to achieve with private hosting.

AWS now offers more than 500 services, covering the entire scope of hosting and then some: there are 17 ways to run a container (a smaller version of a VM) in AWS; there’s a quantum computing research service, another one to communicate with satellites, and of course you can always order a truck full of hard drives.

Reliability also improved. A significant portion of the Internet (including this blog) now runs on public Cloud and, while there are incidents, the major Cloud players’ track record concerning availability is very good.

At the same time, the initial flakiness of public Cloud spurred software engineers to harden their application designs, leading to what is known as Cloud Native Applications. These architectural patterns make the application layer more robust (whereas previously this robustness was expected of the underlying hardware) and allow the application to take full advantage of public Cloud services. Therefore, what was initially a weakness of public Cloud transformed into a competitive advantage for companies that could leverage it[6]. Servers are no longer pets, but cattle: all similar, with serial numbers rather than names, made to be herded at scale and killed when the time comes.

(As an aside, it is much more efficient to run an industrial farm than a kennel: public cloud companies have a much, much lower ratio of employees to servers than traditional managed service providers.)

The same story applies to all the other early limitations of public Cloud. On security, compliance, and regional footprint, public Cloud services went from laggard to best in class. Meanwhile, they built on their historical strengths (pricing, APIs, flexibility and scalability) to define new table stakes that the existing hosting providers found hard to match.

And so public Cloud progressively conquered the corporate hosting market, eventually convincing even the most demanding customers (financial institutions, governments) to jump on the bandwagon.

Meanwhile, in private hosting

When it became clear that public cloud was becoming a threat, the managed service providers were quick to jump into the fray. Most hosting providers, as well as hardware vendors (HP, IBM) and software vendors (VMware, Oracle), tried their hand at public cloud for corporate customers. In other words, the market’s biggest and most experienced companies, with large customer bases and strong sales and engineering teams (remember that VMware literally built the underlying technology!), attempted to pivot to public cloud.

Less than a decade later, only OVH (a large European hosting provider) and Oracle are still players to any meaningful degree in the hosting market.

What happened? In a nutshell: these great firms tried to tackle public cloud as an evolution of the private hosting business. They came to this new market with their existing sales channels, a cost structure and margins inherited from the B2B world, and their corporate customers’ expectations in terms of features and quality of service. On the other side of the ring was AWS, a notoriously frugal company with a large head start (in a market where volume drives a lot of price efficiency), which had single-handedly defined the expectations around Public Cloud. The incumbents tried to compete according to the rules and market they knew rather than the reality of this new ecosystem, and they lost.[7]

A couple of anecdotes to illustrate this: I vividly remember long debates between our product team, which was trying to emulate AWS’s roadmap, and the sales team, who insisted they couldn’t sell the product to our existing customers if we couldn’t support application-level backup and all the other “corporate IT” features they were used to. I also remember that our first business case was built on the assumption that the new customers would be similar to our existing customer base, and this business case drove a lot of engineering decisions. Spoiler: it turns out that we had more customers than expected… but that they were much smaller, and so we had to redesign a lot of our architecture to adapt it to a vastly different business model.

The end result

Whereas 10 years ago public Cloud was a small part of the overall hosting market, it now commands the lion’s share of revenue, with private cloud fast becoming anecdotal. The market is dominated by 4 companies who did not come from the hosting or managed service business: Amazon Web Services, Microsoft Azure, Google Cloud, and Alibaba Cloud. This result is due to the extraordinary rate of improvement of public Cloud offers, and to the inability of the incumbents to break out of their value network and adapt to a disruptive innovation.

In other words, it all played out exactly as described in The Innovator’s Dilemma.

Back to the beginning

So, how does all of this relate to my strategy assignment?

As I hope I have made clear, it makes no sense to manage a disruptive innovation the same way you manage a sustaining one. And indeed, this is one of the answers that Christensen gives to the innovator’s dilemma: to harness a disruptive innovation, an incumbent should spin off a separate entity whose size and nimbleness are better tailored to the uncertainty and conditions of the new market.

Given the above, it does not make much sense to put public and private clouds in the same strategic business unit!

However, I hope this post illustrates how difficult it is to make this distinction from the inside. It is easy for incumbents to rationalize the disruption as just a technological shift that their value network, internal organization and market knowledge will help them “catch up” to, when in fact these historical strengths are a liability in the new market.

As a matter of fact, it wasn’t until I read The Innovator’s Dilemma that I fully understood this, even after spending a significant amount of time thinking about the market and the company’s strengths and weaknesses.

So, Professor Fréry, I admit my mistake; I should have split the company into 2 SBUs. But it’s a mistake I’m glad I made; I wouldn’t have spent as much time thinking (and writing!) about disruptive innovation if I had gotten it right the first time.

Resources

Here are some of the links I drew on for my summary of the book:

- https://fourminutebooks.com/the-innovators-dilemma-summary/

- https://innovationzen.com/blog/2006/10/04/disruptive-innovation/

- http://www.2ndbn5thmar.com/change/The%20Innovators.pdf

- https://www.semanticscholar.org/paper/The-Innovator's-Dilemma-Christensen/ca3698315441292596205d44d1a775d9cfc3fe37

- https://medium.com/quortex/moving-from-the-moores-law-to-bezos-law-33dbd7ecc5e1

- https://www.researchgate.net/publication/278329408_The_Innovator's_Dilemma/link/557f8d1508aeea18b77966d6/download

1. A good book, at any rate. As the old saw goes, in every best-selling management book there is a decent blog post.

2. This is of course an over-simplification. Cloud computing providers offer an extremely wide range of services, but it ultimately comes down to renting (sometimes extremely sophisticated) hosting services rather than building them in-house.

3. At the time, an unavoidable part of corporate IT life, but one requiring much fiddling to work properly.

4. Short for Elastic Compute Cloud. AWS service names are notoriously bad.

5. A service level agreement or SLA is IT speak for “we will give you a small voucher and pretend to be contrite if our service is unavailable”.

6. Which companies? Applying a new architecture pattern is easy if you’re starting from scratch; doable if the software is developed internally and the team is nimble; much more complicated if you have legacy software or are relying on a vendor. So this advantage accrued to the initial public Cloud customers (start-ups and small teams) but was nigh impossible to leverage by corporate IT teams using 3rd-party software.

7. There’s a military term for this: “fighting the last war”.